Module 10: Using Git with AI Tools: Automation and Code Generation

Use AI tools to enhance your Git workflow for test automation: writing commit messages, reviewing test code, creating test scripts from requirements, and automating repository maintenance. You'll integrate ChatGPT, GitHub Copilot, and other AI tools into your daily Git operations.

AI-Powered Commit Messages and Code Review

Why This Matters

The Problem: Time-Consuming Git Overhead

As a test automation engineer, you spend valuable time on Git-related tasks that don’t directly involve writing or running tests. Crafting descriptive commit messages, conducting thorough code reviews, maintaining repository hygiene, and translating requirements into test scripts can take up a substantial part of your workday. Meanwhile, test coverage goals remain unmet, and technical debt accumulates.

Poor commit messages like “fixed stuff” or “updates” make it impossible to track why test changes were made. Inadequate code reviews let bugs slip into test suites. Manual conversion of requirements into test cases is error-prone and tedious. These pain points compound in team environments where multiple engineers contribute to the same test repository.

When You’ll Use This Skill

Daily Git Operations: Every time you commit test code, you’ll use AI to generate clear, context-aware commit messages that explain what changed and why—automatically.

Code Review Sessions: When reviewing pull requests from teammates, AI will help identify potential issues in test scripts, suggest improvements, and ensure testing best practices are followed.

Sprint Planning: As new requirements arrive, you’ll leverage AI to jumpstart test script creation, converting user stories into executable test scaffolding in minutes rather than hours.

Repository Maintenance: During cleanup sprints or when onboarding new team members, AI will help refactor test suites, update documentation, and maintain consistency across your codebase.

Common Pain Points Addressed

- Vague commit history: AI analyzes your code changes and generates descriptive messages that future-you (and your teammates) will appreciate

- Superficial code reviews: Get automated feedback on test quality, coverage gaps, and potential maintenance issues before human review

- Requirements interpretation: Reduce the cognitive load of translating acceptance criteria into test logic

- Inconsistent coding standards: AI helps enforce patterns and conventions across your test automation framework

- Documentation lag: Automatically generate or update README files, test plans, and inline comments

Learning Objectives Overview

In this lesson, you’ll transform your Git workflow from manual and time-consuming to AI-assisted and efficient. Here’s what you’ll accomplish:

1. Integrate AI Tools with Git Workflow

You’ll start by setting up AI assistants (ChatGPT, GitHub Copilot, and CLI tools) to work seamlessly with your Git operations. We’ll configure API access, install necessary extensions, and create a personalized AI assistant configuration tailored for test automation tasks.

2. Generate Meaningful Commit Messages

You’ll learn multiple approaches to AI-powered commit message generation: using pre-commit hooks that analyze staged changes, CLI commands that generate messages on-demand, and IDE integrations that suggest messages as you code. You’ll see real examples comparing generic messages versus AI-enhanced ones.

3. Perform Automated Code Reviews

We’ll implement AI-driven code review workflows that examine test scripts for common issues: hard-coded values, missing assertions, poor exception handling, and test flakiness indicators. You’ll set up automated review comments on pull requests and learn to interpret AI feedback effectively.

4. Create Test Scripts from Requirements

You’ll practice feeding user stories and acceptance criteria to AI tools, then refining the generated test scaffolding. We’ll cover prompt engineering techniques specific to test automation, including how to specify frameworks, design patterns, and assertion strategies.

5. Automate Repository Maintenance

Finally, you’ll use AI to handle routine repository tasks: generating release notes from commit history, updating test documentation, identifying deprecated test patterns, and suggesting refactoring opportunities. You’ll create scheduled automation that keeps your repository clean and well-documented.

By the end of this lesson, you’ll have a complete AI-enhanced Git workflow that saves hours each week while improving the quality and maintainability of your test automation codebase.

Core Content

Core Content: AI-Powered Commit Messages and Code Review

Core Concepts Explained

Understanding AI-Powered Git Tools

AI-powered Git tools use large language models to analyze code changes and generate human-readable commit messages, pull request descriptions, and code review comments. They read diffs and the surrounding context and draft documentation that follows common conventions, which you then review.

Key Benefits:

- Time Savings: Reduces the time spent writing commit messages by hand

- Consistency: Maintains standardized message formats across teams

- Context Awareness: Analyzes actual code changes to generate relevant descriptions

- Code Quality: Provides automated code review suggestions

Popular AI Git Tools

- Commitizen with AI plugins - Interactive commit message generator

- AICommits - OpenAI-powered commit message generator

- GitHub Copilot for Pull Requests - Generates PR descriptions

- CodeRabbit - AI-powered code review bot

Installing and Configuring AI Commit Tools

Step 1: Install AICommits

AICommits is a powerful CLI tool that uses OpenAI’s GPT models to generate commit messages.

```bash
# Install AICommits globally using npm
npm install -g aicommits

# Verify installation
aicommits --version
```

Step 2: Configure AICommits with API Key

```bash
# Store your OpenAI API key in the AICommits config
aicommits config set OPENAI_KEY=sk-your-api-key-here

# Configure the model (optional)
aicommits config set model=gpt-4

# Set the maximum character length of generated messages
aicommits config set max-length=100

# Enable the Conventional Commits format
aicommits config set type=conventional
```

Step 3: Install Alternative Tool - Commitizen

```bash
# Install commitizen and conventional changelog
npm install -g commitizen cz-conventional-changelog

# Initialize in your project
cd your-project
commitizen init cz-conventional-changelog --save-dev --save-exact
```

Using AI-Powered Commit Messages

Basic Usage with AICommits

```bash
# Make changes to your test automation code
# Example: create a new test file
mkdir -p tests
cat > tests/login.spec.js << 'EOF'
const { test, expect } = require('@playwright/test');

test('user can login with valid credentials', async ({ page }) => {
  await page.goto('https://practiceautomatedtesting.com/login');
  await page.fill('#username', 'testuser');
  await page.fill('#password', 'password123');
  await page.click('#login-button');
  await expect(page).toHaveURL(/.*dashboard/);
});
EOF

# Stage your changes
git add tests/login.spec.js

# Generate an AI commit message
aicommits
```

Illustrative output (exact prompts and wording vary with the AICommits version and model, and the generated message is often just a subject line):

```text
$ aicommits
◇ Analyzing changes...
◆ Generated commit message:
  test(auth): add login test with valid credentials

  - Create new test spec for user authentication
  - Verify navigation to dashboard after successful login
  - Use practiceautomatedtesting.com login page

◇ Use this commit message? (Y/n)
```

Using Commitizen for Interactive Commits

```bash
# Stage your changes
git add .

# Run Commitizen
git cz
```

Follow the interactive prompts (type list abbreviated):

```text
? Select the type of change: (Use arrow keys)
❯ feat: A new feature
  fix: A bug fix
  docs: Documentation only changes
  style: Changes that don't affect code meaning
  refactor: Code change that neither fixes a bug nor adds a feature
  perf: A code change that improves performance
  test: Adding missing tests
```

Interactive Example:

```text
$ git cz
? Select the type of change: test
? What is the scope: authentication
? Write a short description: add comprehensive login validation tests
? Provide a longer description:
  - Test valid credentials flow
  - Test invalid password handling
  - Test locked account scenarios
? Are there any breaking changes? No
? Does this change affect any open issues? Yes
? Add issue references: Closes #42
```
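Because the header Commitizen assembles has a fixed shape, `type(scope)!: subject`, it can be checked mechanically, which is also handy for validating AI-generated messages. A minimal Python sketch; `parse_header` and `CommitHeader` are illustrative names, and the allowed types mirror the cz-conventional-changelog list (which also includes build, ci, chore, and revert beyond those shown above):

```python
import re
from dataclasses import dataclass
from typing import Optional

# Conventional Commits header: type(scope)!: subject
HEADER_RE = re.compile(
    r"^(?P<type>[a-z]+)"           # type, e.g. feat, fix, test
    r"(?:\((?P<scope>[^()]+)\))?"  # optional scope in parentheses
    r"(?P<breaking>!)?"            # optional breaking-change marker
    r": (?P<subject>.+)$"          # colon, space, then the short description
)

# Types offered by the cz-conventional-changelog prompt
ALLOWED_TYPES = {"feat", "fix", "docs", "style", "refactor", "perf",
                 "test", "build", "ci", "chore", "revert"}


@dataclass
class CommitHeader:
    type: str
    scope: Optional[str]
    breaking: bool
    subject: str


def parse_header(message: str) -> Optional[CommitHeader]:
    """Parse the first line of a commit message; return None if it is not conventional."""
    lines = message.strip().splitlines()
    if not lines:
        return None
    match = HEADER_RE.match(lines[0])
    if not match or match["type"] not in ALLOWED_TYPES:
        return None
    return CommitHeader(
        type=match["type"],
        scope=match["scope"],
        breaking=match["breaking"] is not None,
        subject=match["subject"],
    )
```

For example, `parse_header("fixed stuff")` returns `None`, so a wrapper script could refuse that message and ask for a regeneration.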

Generating AI-Powered Pull Request Descriptions

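Using AICommits on a Feature Branch

AICommits writes commit messages, not PR descriptions. It can, however, install itself as a Git `prepare-commit-msg` hook so that a plain `git commit` opens your editor with an AI-generated message. Descriptive commits on the branch are then the raw material for the PR description script below.

```bash
# Create a feature branch
git checkout -b feature/add-api-tests

# Make commits with AI-generated messages
# ... make changes to API tests ...
git add tests/api/
aicommits

# Optional: install AICommits as a Git hook so `git commit` pre-fills messages
aicommits hook install

# ... make more changes ...
git add tests/api/
git commit   # review and edit the AI-generated message in your editor

# Push the branch, then open the PR on GitHub
git push origin feature/add-api-tests
```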

Manual PR Description Generation Script

Create a helper script to generate PR descriptions:

```javascript
// pr-description.js
const { execSync } = require('child_process');
const fs = require('fs');

// Base branch to compare against; change it if yours is not "main"
const BASE = 'main';

// Run a git command and return its non-empty output lines
function gitLines(cmd) {
  return execSync(cmd, { encoding: 'utf8' })
    .split('\n')
    .filter((line) => line.trim());
}

function generatePRDescription() {
  // Commit subjects on this branch that are not on the base branch
  const commits = gitLines(`git log ${BASE}..HEAD --pretty=format:"%s"`);

  // Files changed since the branch diverged from the base branch
  const files = gitLines(`git diff --name-only ${BASE}...HEAD`);

  // Generate description
  const description = `
## Changes

${commits.map((c) => `- ${c}`).join('\n')}

## Modified Files

${files.map((f) => `- \`${f}\``).join('\n')}

## Testing

- [ ] All tests pass locally
- [ ] New tests added for new functionality
- [ ] Manual testing completed

## Screenshots

<!-- Add screenshots if applicable -->
`.trim();

  console.log(description);

  // Save to a file so it can be pasted into (or passed to) the PR
  fs.writeFileSync('.pr-description.md', description);
  console.log('\n✅ PR description saved to .pr-description.md');
}

generatePRDescription();
```

Usage:

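```bash
# Generate PR description
node pr-description.js

# Copy the output into the GitHub PR...
cat .pr-description.md

# ...or create the PR from the file with the GitHub CLI
gh pr create --body-file .pr-description.md
```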
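The script above fills a template from git data; no AI is involved. To have a model draft the prose instead, you can feed it the same branch data. A Python sketch of the gathering and prompt-building side, assuming the base branch is `main` (`git_lines`, `collect_branch_info`, and `build_pr_prompt` are hypothetical helpers, and the section names in the prompt are an arbitrary choice):

```python
import subprocess
from typing import List, Tuple


def git_lines(*args: str) -> List[str]:
    """Run a git command and return its non-empty output lines."""
    result = subprocess.run(["git", *args], check=True, capture_output=True, text=True)
    return [line for line in result.stdout.splitlines() if line.strip()]


def collect_branch_info(base: str = "main") -> Tuple[List[str], List[str]]:
    """Commit subjects and changed files on the current branch relative to `base`."""
    commits = git_lines("log", f"{base}..HEAD", "--pretty=format:%s")
    files = git_lines("diff", "--name-only", f"{base}...HEAD")
    return commits, files


def build_pr_prompt(commits: List[str], files: List[str]) -> str:
    """Assemble a prompt asking a model to draft a PR description from branch data."""
    commit_block = "\n".join(f"- {c}" for c in commits) or "- (no commits)"
    file_block = "\n".join(f"- {f}" for f in files) or "- (no files)"
    return (
        "Write a GitHub pull request description in Markdown with the sections "
        "'Changes', 'Testing', and 'Risks'. Use only the commits and files below; "
        "do not invent changes.\n\n"
        f"Commits:\n{commit_block}\n\n"
        f"Changed files:\n{file_block}\n"
    )
```

Running `print(build_pr_prompt(*collect_branch_info()))` inside the repository prints a prompt you can paste into ChatGPT or send through an API client. Telling the model to use only the listed commits and files reduces, but does not eliminate, invented details, so review the result before posting.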

Implementing AI Code Review in Pull Requests

Setting Up CodeRabbit (GitHub App)

```mermaid
graph LR
    A[Create PR] --> B[CodeRabbit Analyzes]
    B --> C[AI Reviews Code]
    C --> D[Posts Comments]
    D --> E[Developer Responds]
    E --> F[Resolve/Update]
    F --> G[Merge]
```

Steps to Install:

- Visit the GitHub Marketplace: https://github.com/marketplace/coderabbit-ai
- Click “Set up a plan”
- Select the repositories to enable
- Configure `.coderabbit.yaml` in your repository:

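The keys below follow CodeRabbit's documented `.coderabbit.yaml` schema; coverage and test-quality expectations are written as review instructions rather than numeric thresholds. Check the current CodeRabbit configuration reference, since the schema evolves.

```yaml
# .coderabbit.yaml
reviews:
  profile: "assertive"          # more detailed feedback than "chill"
  request_changes_workflow: true
  auto_review:
    enabled: true
  path_filters:                 # "!" excludes paths from review
    - "!node_modules/**"
    - "!coverage/**"
    - "!**/*.min.js"
  path_instructions:
    - path: "**/*.spec.js"
      instructions: |
        Review test files strictly:
        - Flag hard-coded credentials or secrets.
        - Require meaningful assertions in every test.
        - Tests must be independent: no reliance on execution order or shared state.
        - Point out changes likely to reduce test coverage (target: 80%).
```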

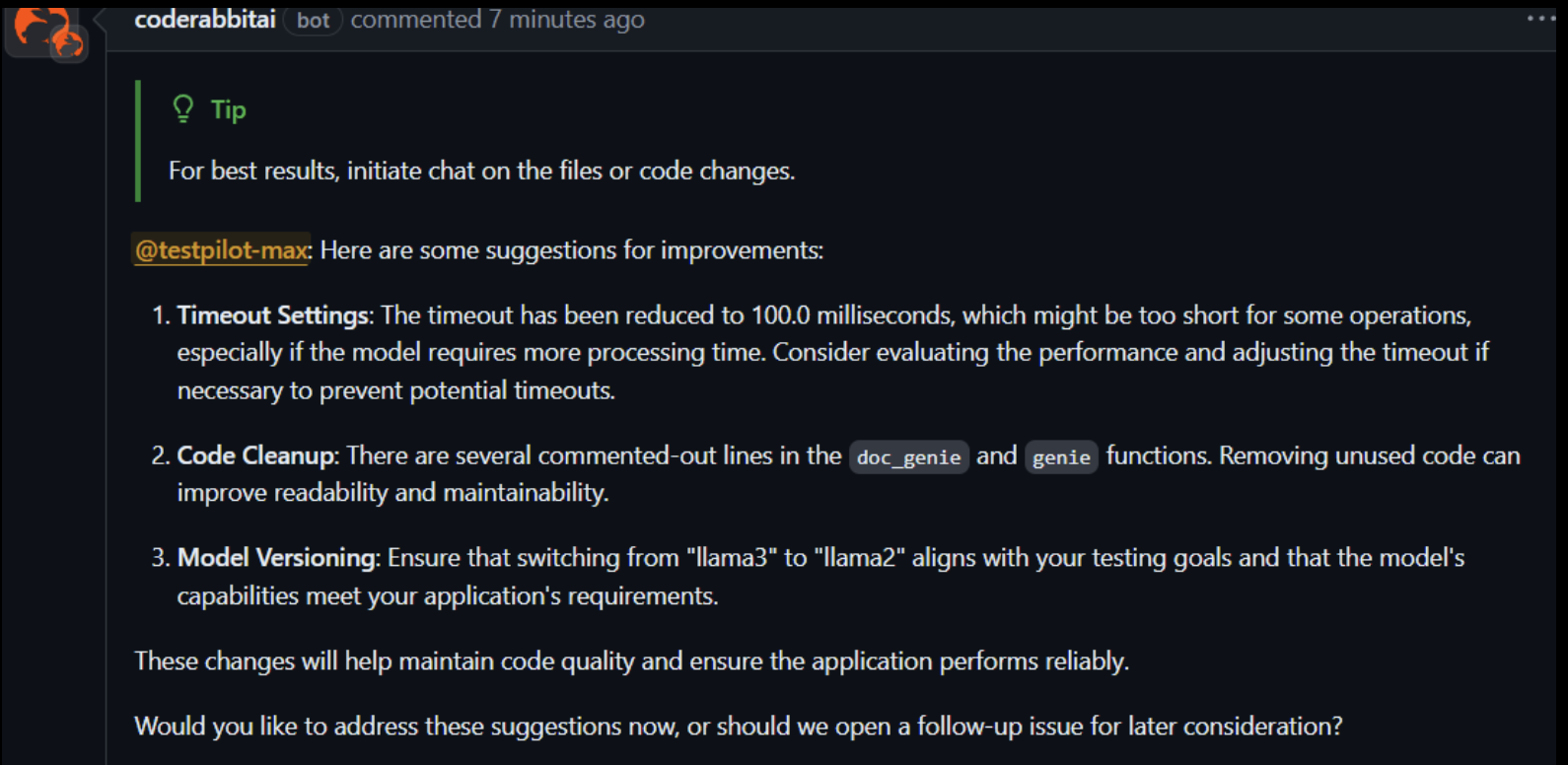

Here is an example of a code review

Creating Custom Code Review Automation

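The script below uses simple rule-based checks rather than an AI model; it is a starting point you can extend, for example by sending file contents to an AI API for deeper review. It reads the owner, repository, and PR number from the command line so the workflow below can call it.

```javascript
// github-review-bot.js
// Assumes @octokit/rest v20: later major versions are ESM-only and cannot be require()d
const { Octokit } = require('@octokit/rest');

const octokit = new Octokit({ auth: process.env.GITHUB_TOKEN });

// 1-based numbers of the lines that contain `needle`
function linesContaining(content, needle) {
  return content
    .split('\n')
    .map((line, i) => (line.includes(needle) ? i + 1 : 0))
    .filter(Boolean);
}

async function reviewPullRequest(owner, repo, pull_number) {
  // Get all PR files (results are paged, so let Octokit follow the pages)
  const files = await octokit.paginate(octokit.rest.pulls.listFiles, {
    owner,
    repo,
    pull_number,
    per_page: 100,
  });

  const findings = [];

  // Review test files that still exist in the PR
  for (const file of files) {
    if (!file.filename.endsWith('.spec.js') || file.status === 'removed') continue;

    // file.sha is a blob SHA (not a commit ref), so read it via the Git blobs API
    const { data: blob } = await octokit.rest.git.getBlob({
      owner,
      repo,
      file_sha: file.sha,
    });
    const content = Buffer.from(blob.content, 'base64').toString('utf8');

    // Check for common issues
    if (!content.includes('describe(') && !content.includes('test(')) {
      findings.push(`- \`${file.filename}\`: ⚠️ missing proper test structure (describe/test blocks)`);
    }
    const waits = linesContaining(content, 'waitForTimeout');
    if (waits.length > 0) {
      findings.push(`- \`${file.filename}\` (line ${waits.join(', ')}): 🔍 consider waitForSelector or a web-first assertion instead of waitForTimeout for better stability`);
    }
    if (!content.includes('expect(')) {
      findings.push(`- \`${file.filename}\`: ❌ no assertions; add expect() statements`);
    }
  }

  // Post one review with a summary body. GitHub rejects inline review
  // comments unless every commented line is part of the PR diff.
  if (findings.length > 0) {
    await octokit.rest.pulls.createReview({
      owner,
      repo,
      pull_number,
      event: 'COMMENT',
      body: `Automated test review:\n\n${findings.join('\n')}`,
    });
    console.log(`✅ Posted review with ${findings.length} findings`);
  }
}

// Usage: node github-review-bot.js <owner> <repo> <pull_number>
const [owner, repo, prNumber] = process.argv.slice(2);
reviewPullRequest(owner, repo, Number(prNumber)).catch((err) => {
  console.error(err);
  process.exit(1);
});
```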

GitHub Actions Workflow Integration:

```yaml
# .github/workflows/ai-review.yml
name: AI Code Review

on:
  pull_request:
    types: [opened, synchronize]

# Creating a review needs write access to pull requests
# (PRs from forks still receive a read-only token)
permissions:
  contents: read
  pull-requests: write

jobs:
  ai-review:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '20'

      - name: Install dependencies
        # Pin v20: later @octokit/rest releases are ESM-only and break require()
        run: npm install @octokit/rest@20

      - name: Run AI Review
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        run: |
          node github-review-bot.js ${{ github.repository_owner }} \
            ${{ github.event.repository.name }} \
            ${{ github.event.pull_request.number }}
```
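The checks in github-review-bot.js are plain string matching rather than AI. Before adding rules to the bot for other issues named in the learning objectives, such as focused tests left in, fixed sleeps, or hard-coded credentials, it can help to prototype them against file text locally. A Python sketch; the rule list and the `lint_spec` name are illustrative assumptions, and the regexes are heuristics, not a JavaScript parser:

```python
import re
from typing import List, Tuple

# (pattern checked on each line, message). Heuristics, not a JavaScript parser.
LINE_RULES = [
    (re.compile(r"\b(?:test|describe)\.only\("),
     "focused test (.only) will skip the rest of the suite"),
    (re.compile(r"\bwaitForTimeout\("),
     "fixed sleep; prefer waitForSelector or a web-first assertion"),
    (re.compile(r"\.fill\(\s*['\"]#?password['\"]\s*,\s*['\"][^'\"]+['\"]"),
     "hard-coded password; read it from an environment variable"),
]


def lint_spec(content: str) -> List[Tuple[int, str]]:
    """Return (line_number, message) findings for a Playwright spec file's text."""
    findings: List[Tuple[int, str]] = []
    for number, line in enumerate(content.splitlines(), start=1):
        for pattern, message in LINE_RULES:
            if pattern.search(line):
                findings.append((number, message))
    # File-level check carried over from the bot
    if "expect(" not in content:
        findings.append((1, "no assertions found; add expect() calls"))
    return findings
```

Once a rule proves useful on real spec files, porting the regex into the bot's loop is straightforward.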

Complete Workflow Example

```bash
# 1. Create feature branch
git checkout -b feature/checkout-tests

# 2. Create test file
cat > tests/checkout.spec.js << 'EOF'
const { test, expect } = require('@playwright/test');

test.describe('Checkout Process', () => {
  test('complete purchase with valid payment', async ({ page }) => {
    await page.goto('https://practiceautomatedtesting.com/shop');
    await page.click('[data-testid="add-to-cart"]');
    await page.click('[data-testid="checkout-button"]');
    await page.fill('#card-number', '4242424242424242');
    await page.fill('#expiry', '12/25');
    await page.fill('#cvc', '123');
    await page.click('#complete-purchase');
    await expect(page.locator('.success-message')).toBeVisible();
  });
});
EOF

# 3. Stage and commit with AI
git add tests/checkout.spec.js
aicommits

# 4. Push, then open a PR (on GitHub, or with `gh pr create`)
git push -u origin feature/checkout-tests

# 5. The review workflow runs automatically on the new PR

# 6. Address feedback and update
git add .
aicommits
git push
```

Common Mistakes and Debugging

Mistake 1: Generic or Unhelpful Commit Messages

❌ Wrong (many unrelated changes staged at once give the model little specific to describe):

```text
$ aicommits
Generated: "update files"
```

✅ Correct:

```bash
# Make atomic, focused commits
git add tests/login.spec.js
aicommits   # e.g. "test(auth): add login test with validation"

git add tests/logout.spec.js
aicommits   # e.g. "test(auth): add logout test with session cleanup"
```

Mistake 2: Not Reviewing AI-Generated Content

Always review AI-generated messages before accepting:

```text
$ aicommits
Generated: "fix: resolve issue with test"
```

This is too vague! Answer `n` at the confirmation prompt, then regenerate:

```bash
aicommits --generate 3   # Generate 3 options to choose from
```
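If you wrap an AI commit tool in your own script, a cheap heuristic gate can flag vague output like the message above before you accept it. A minimal Python sketch; the vague-word list and the 72-character header limit are illustrative choices, not rules built into AICommits:

```python
import re
from typing import List

# Words that say little on their own about what changed (illustrative list)
VAGUE_WORDS = {
    "update", "updates", "updated", "fix", "fixed", "fixes", "resolve", "resolved",
    "issue", "issues", "stuff", "change", "changes", "misc", "wip", "file", "files",
    "code", "test", "tests", "some", "various", "the", "with", "and", "for",
}
MAX_HEADER = 72


def review_message(message: str) -> List[str]:
    """Return reasons to reject a generated commit message; empty means it passed."""
    problems: List[str] = []
    stripped = message.strip()
    header = stripped.splitlines()[0] if stripped else ""
    if len(header) > MAX_HEADER:
        problems.append(f"header is {len(header)} chars; keep it to {MAX_HEADER} or fewer")
    # Ignore a conventional "type(scope):" prefix and judge only the description
    subject = re.sub(r"^[a-z]+(\([^)]*\))?!?:\s*", "", header)
    words = re.findall(r"[a-z0-9]+", subject.lower())
    # Require at least two specific words of three or more letters
    specific = [w for w in words if w not in VAGUE_WORDS and len(w) > 2]
    if len(specific) < 2:
        problems.append("description is too generic; name the test, feature, or bug")
    return problems
```

`review_message("fix: resolve issue with test")` returns one problem (too generic), while `review_message("test(auth): add login test with validation")` returns an empty list.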

Mistake 3: Missing API Key Configuration

Error (exact wording varies by AICommits version):

```text
$ aicommits
Error: OPENAI_KEY is not set
```

Fix:

```bash
# Set API key
aicommits config set OPENAI_KEY=sk-your-key

# Verify configuration
aicommits config get OPENAI_KEY
```

Mistake 4: Committing Sensitive Information

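Add a pre-commit hook (here managed by Husky) that blocks commits which look like they contain secrets:

```sh
#!/bin/sh
# .husky/pre-commit
# Husky v5-v8 hooks also started with: . "$(dirname "$0")/_/husky.sh" (not needed in v9+)

# Check only added lines of the staged diff (skipping +++ file headers) for potential secrets
if git diff --cached | grep '^+' | grep -v '^+++' | grep -iE "(api[_-]?key|password|secret|token)" | grep -v "practiceautomatedtesting"; then
  echo "⚠️ Potential secrets detected in commit!"
  echo "Please review your changes."
  exit 1
fi
```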

Debugging AI Tool Issues

```bash
# Check which key and model AICommits is configured with
aicommits config get OPENAI_KEY
aicommits config get model

# Test OpenAI API connectivity (assumes your key is exported as OPENAI_KEY)
curl https://api.openai.com/v1/models \
  -H "Authorization: Bearer $OPENAI_KEY"

# Retry with a different model
aicommits config set model=gpt-3.5-turbo
aicommits
```

Best Practices Summary

- Commit Frequently: Small, focused commits generate better AI messages

- Review Before Accepting: Always review AI-generated content

- Configure Conventions: Set up commit message conventions early

- Combine Tools: Use AI for generation, humans for validation

- Secure Credentials: Never commit API keys; use environment variables

- Test Integration: Verify CI/CD pipelines work with AI-generated messages

```mermaid
graph TD
    A[Make Code Changes] --> B[Stage Changes]
    B --> C[Run AI Commit Tool]
    C --> D{Review Message}
    D -->|Good| E[Accept & Commit]
    D -->|Poor| F[Regenerate or Edit]
    F --> C
    E --> G[Push to Remote]
    G --> H[AI Code Review]
    H --> I[Address Feedback]
    I --> J[Merge]
```

Hands-On Practice

EXERCISE

Hands-On Exercise: Build an AI-Powered Git Workflow Automation Tool

Task

Create a Python-based tool that automatically generates commit messages and performs code reviews using AI. Your tool should analyze code changes, generate meaningful commit messages, and provide code quality feedback.

Prerequisites

- Python 3.8+

- Git repository

- OpenAI API key (or similar AI service)

- Basic understanding of Git commands

Step-by-Step Instructions

Step 1: Set Up Your Environment

```bash
# Create a new directory for your project
mkdir ai-git-assistant
cd ai-git-assistant

# Initialize a virtual environment
python -m venv venv
source venv/bin/activate  # On Windows: venv\Scripts\activate

# Install required packages
pip install openai gitpython python-dotenv
```

Step 2: Create the Starter Code Structure

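config.py

```python
import os

from dotenv import load_dotenv

# Load variables from a .env file into the environment
load_dotenv()

API_KEY = os.getenv("OPENAI_API_KEY")
MODEL = "gpt-4"
```

.env

```text
OPENAI_API_KEY=your_api_key_here
```

Add `.env` (and `venv/`) to your `.gitignore` so the key is never committed.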

Step 3: Implement the Git Diff Analyzer

Create git_analyzer.py and implement:

- Function to get staged changes using GitPython

- Function to parse diff output

- Function to identify file types and changes

Step 4: Implement the Commit Message Generator

Create commit_generator.py and implement:

- Function to analyze code changes
- Function to generate commit messages following conventions:
  - Use conventional commit format (feat:, fix:, docs:, etc.)
  - Include brief description
  - Add bullet points for multiple changes
  - Keep under 72 characters for the title
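One way to structure `commit_generator.py` is to keep prompt building and output clean-up separate from the API call, so the formatting rules can be tested without network access. A sketch assuming the `openai` Python package v1 or later (`OpenAI().chat.completions.create`); `generate_commit_message` matches the import in `main.py`, while the other helper names are hypothetical:

```python
MAX_TITLE = 72


def build_commit_prompt(diff: str) -> str:
    """Prompt asking for a Conventional Commits message for a staged diff."""
    return (
        "Write a git commit message for the staged diff below. First line: "
        f"'<type>(<scope>): <subject>' in at most {MAX_TITLE} characters, using a type "
        "such as feat, fix, docs, test, or refactor. Then a blank line and one '-' "
        "bullet per change. Reply with the message only.\n\n" + diff
    )


def clean_commit_message(raw: str) -> str:
    """Drop Markdown fences the model may add and enforce the title length."""
    lines = [line for line in raw.strip().splitlines()
             if not line.strip().startswith("```")]
    if not lines:
        return ""
    title = lines[0].strip()
    if len(title) > MAX_TITLE:
        # Cut at the last space inside the limit instead of mid-word
        title = title[:MAX_TITLE].rsplit(" ", 1)[0]
    body = "\n".join(lines[1:]).strip()
    return f"{title}\n\n{body}" if body else title


def generate_commit_message(diff: str, model: str = "gpt-4") -> str:
    """Call the OpenAI Chat Completions API and clean up the reply."""
    from openai import OpenAI  # lazy import: the helpers above work without the package

    client = OpenAI()  # reads OPENAI_API_KEY from the environment (see config.py)
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": build_commit_prompt(diff)}],
    )
    return clean_commit_message(response.choices[0].message.content or "")
```

Keeping `clean_commit_message` separate means the 72-character rule holds even when the model ignores the instruction.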

Step 5: Implement the Code Reviewer

Create code_reviewer.py and implement:

- Function to review code changes for:
  - Potential bugs
  - Security vulnerabilities
  - Code smells
  - Best practice violations
  - Performance issues
- Function to generate actionable feedback
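To support the 1-10 rating and per-line feedback listed under Expected Outcome, you can ask the model for JSON and validate the reply before showing it. A sketch of the prompt and parsing side only; the JSON shape, category names, and helper names are assumptions, and because models do not always return valid JSON, callers should catch `ValueError` and fall back to printing the raw reply:

```python
import json
from typing import Any, Dict, List

CATEGORIES = ["bug", "security", "code_smell", "best_practice", "performance"]

REVIEW_PROMPT = (
    "Review this staged diff for bugs, security vulnerabilities, code smells, "
    "best-practice violations, and performance issues. Reply with JSON only, shaped like "
    '{"score": <1-10>, "findings": [{"category": "...", "file": "...", "line": 0, '
    '"issue": "...", "suggestion": "..."}]}, where category is one of: '
    + ", ".join(CATEGORIES)
    + "\n\nDiff:\n"
)


def parse_review(raw: str) -> Dict[str, Any]:
    """Validate the model's JSON reply; raises ValueError if it is unusable."""
    text = raw.strip()
    if text.startswith("```"):
        # Remove a ```json ... ``` wrapper if the model added one
        text = text.strip("`").strip()
        if text.startswith("json"):
            text = text[len("json"):]
    data = json.loads(text)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    score = data.get("score")
    if not isinstance(score, int) or not 1 <= score <= 10:
        raise ValueError(f"score must be an integer from 1 to 10, got {score!r}")
    findings: List[dict] = [
        f for f in data.get("findings", [])
        if isinstance(f, dict) and f.get("category") in CATEGORIES
    ]
    return {"score": score, "findings": findings}


def format_review(review: Dict[str, Any]) -> str:
    """Render findings as 'file:line' feedback lines for the terminal."""
    lines = [f"Quality score: {review['score']}/10"]
    for f in review["findings"]:
        lines.append(
            f"[{f['category']}] {f.get('file', '?')}:{f.get('line', '?')} "
            f"{f.get('issue', '')} -> {f.get('suggestion', '')}"
        )
    return "\n".join(lines)
```

In `review_code`, you would send `REVIEW_PROMPT + diff` to the model, pass the reply to `parse_review`, and print `format_review(...)`.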

Step 6: Create the Main CLI

Create main.py:

import argparse

from git_analyzer import get_staged_changes

from commit_generator import generate_commit_message

from code_reviewer import review_code

def main():

parser = argparse.ArgumentParser(description="AI-Powered Git Assistant")

parser.add_argument("--commit", action="store_true",

help="Generate commit message")

parser.add_argument("--review", action="store_true",

help="Review staged changes")

parser.add_argument("--auto-commit", action="store_true",

help="Generate and apply commit automatically")

args = parser.parse_args()

# TODO: Implement command handling

pass

if __name__ == "__main__":

main()

Expected Outcome

Your tool should:

- Generate Commit Messages that:
  - Follow conventional commit format
  - Accurately describe the changes
  - Include context about why changes were made
  - Are concise and meaningful
- Provide Code Reviews that:
  - Identify at least 3 categories of issues
  - Offer specific line-by-line feedback
  - Suggest improvements
  - Rate code quality (1-10 scale)
- Work Seamlessly with Git:
  - Analyze only staged changes
  - Handle multiple file types
  - Provide options for automation

Testing Your Tool

Create a test scenario:

```bash
# Initialize a test repository
git init test-repo
cd test-repo

# Create a sample file with intentional issues
cat > app.py << 'EOF'
def calculate_total(items):
    total = 0
    for i in items:
        total = total + i
    return total

password = "admin123"  # hardcoded password
user_input = input("Enter data: ")
eval(user_input)  # security issue
EOF

# Stage the file
git add app.py

# Test your tool
python ../main.py --review
python ../main.py --commit
```
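On a real repository the staged diff can be much larger than this sample and may exceed the model's context window. One hedged approach is to keep whole per-file sections up to a character budget (a rough stand-in for a token count; the 12,000 default is arbitrary) and tell the model which files were left out. The helper names are hypothetical:

```python
from typing import List


def split_by_file(diff: str) -> List[str]:
    """Split a unified diff into one chunk per 'diff --git' section."""
    chunks: List[str] = []
    current: List[str] = []
    for line in diff.splitlines(keepends=True):
        if line.startswith("diff --git ") and current:
            chunks.append("".join(current))
            current = []
        current.append(line)
    if current:
        chunks.append("".join(current))
    return chunks


def fit_diff(diff: str, max_chars: int = 12_000) -> str:
    """Keep whole file sections within a character budget and list the rest."""
    kept: List[str] = []
    skipped: List[str] = []
    used = 0
    for chunk in split_by_file(diff):
        if used + len(chunk) <= max_chars:
            kept.append(chunk)
            used += len(chunk)
        else:
            # The first line ('diff --git a/x b/x') still tells the model the file changed
            skipped.append(chunk.splitlines()[0])
    note = ""
    if skipped:
        note = "\n[Omitted for length:]\n" + "\n".join(skipped) + "\n"
    return "".join(kept) + note
```

Splitting on file boundaries rather than cutting at an arbitrary character keeps every included hunk intact, so the model never sees half a change.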

Solution Approach

Key Implementation Tips:

- Git Diff Analysis:

```python
from git import Repo


def get_staged_changes():
    repo = Repo(".")
    # "--cached" limits the diff to staged changes
    diff = repo.git.diff("--cached")
    return diff
```

- AI Prompt Engineering:

```python
prompt = f"""Analyze this git diff and generate a conventional commit message:

{diff}

Format: <type>(<scope>): <subject>

Include:
- Appropriate type (feat, fix, docs, refactor, etc.)
- Clear, concise description
- Why this change matters
"""
```

- Code Review Prompt:

```python
review_prompt = f"""Review this code for:
1. Security vulnerabilities
2. Performance issues
3. Code quality
4. Best practices

Code diff:
{diff}

Provide specific, actionable feedback.
"""
```

KEY TAKEAWAYS

- AI enhances Git workflows by automating repetitive tasks like writing commit messages and conducting initial code reviews, saving developers significant time and ensuring consistency

- Effective prompt engineering is crucial for getting quality output from AI models—structured prompts with clear context, examples, and constraints produce better commit messages and code reviews

- AI-powered tools work best as assistants, not replacements—they should augment human decision-making by providing suggestions that developers can review, edit, and approve

- Integration with existing workflows matters—successful automation tools seamlessly integrate with Git, IDEs, and CI/CD pipelines without disrupting established development processes

- Quality control and validation are essential—always review AI-generated content for accuracy, implement fallback mechanisms for API failures, and maintain human oversight for critical decisions

NEXT STEPS

What to Practice

- Enhance your tool with additional features:
  - Support for different AI providers (Anthropic, Cohere, local models)
  - Integration with GitHub/GitLab APIs for pull request reviews
  - Custom commit message templates for different project types
  - Caching to reduce API calls and costs
- Experiment with prompt engineering:
  - Test different prompt structures for better results
  - Add few-shot examples to improve consistency
  - Implement prompt templates for different scenarios
- Build a pre-commit hook that automatically runs your tool before commits
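Git's `prepare-commit-msg` hook, which runs after the default message file is prepared but before the editor opens, is a natural place to pre-fill a message (`pre-commit` runs before any message exists). A Python sketch, assuming the exercise's `generate_commit_message` is importable from the hook's environment; `should_generate` and `prepend_message` are hypothetical helpers. Save it as `.git/hooks/prepare-commit-msg` and make it executable:

```python
#!/usr/bin/env python3
"""Sketch of a prepare-commit-msg hook that pre-fills an AI-generated message."""
import subprocess
import sys
from typing import List

# Message sources for which git already has text we should not overwrite
KEEP_EXISTING = {"message", "template", "merge", "squash", "commit"}


def should_generate(source: str) -> bool:
    """Git passes the source as the 2nd argument; it is absent for a plain `git commit`."""
    return source not in KEEP_EXISTING


def prepend_message(path: str, generated: str) -> None:
    """Put the generated message above git's commented help text."""
    with open(path, encoding="utf-8") as f:
        existing = f.read()
    with open(path, "w", encoding="utf-8") as f:
        f.write(generated.rstrip() + "\n\n" + existing)


def main(argv: List[str]) -> int:
    msg_file = argv[1]
    source = argv[2] if len(argv) > 2 else ""
    if not should_generate(source):
        return 0
    diff = subprocess.run(["git", "diff", "--cached"], capture_output=True,
                          text=True, check=True).stdout
    if not diff.strip():
        return 0
    try:
        # Hypothetical import of the exercise module; adjust sys.path as needed
        from commit_generator import generate_commit_message
        prepend_message(msg_file, generate_commit_message(diff))
    except Exception as exc:  # never block the commit because the AI call failed
        print(f"AI commit message skipped: {exc}", file=sys.stderr)
    return 0


# Git always passes the message file path, so skip when run without arguments
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv))
```

Returning 0 on every path keeps the hook advisory: if the API is down, you simply get git's normal empty message.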

Related Topics to Explore

- Advanced Git automation: pre-commit hooks, Git hooks, and CI/CD integration

- Natural Language Processing: Understanding how to analyze and generate technical documentation

- AI model fine-tuning: Training custom models on your codebase for better context-awareness

- Static code analysis: Integrating traditional linters (pylint, ESLint) with AI reviews

- Test generation: Using AI to automatically generate unit tests for code changes

- Documentation automation: Generating README files, API docs, and inline comments with AI

Resources for Continued Learning

- Explore GitHub Copilot and other AI coding assistants

- Study conventional commit specifications in depth

- Learn about semantic versioning and automated changelog generation

- Investigate tools like Commitizen, Conventional Changelog, and semantic-release